In this article, we will be looking at Digital Signal Processing. Put simply, this involves taking a signal, representing it as data, and then altering that data mathematically. We will be focusing on DSP software, where the signal that needs to be altered has already gone through the conversion process and exists in digital form as 1s and 0s. We will also look at what digital signal processing is, its different types, and how to use it.

What Is Digital Signal Processing (DSP)?

So, what is Digital Signal Processing? In audio, DSP takes an analog signal such as the electrical signal from a microphone, converts it into digital data, and then applies mathematical operations to that data. When the result is converted back into a real-life analog signal, it has been changed in the way you wanted.

Many years ago, an engineer in a recording studio in Memphis, Tennessee was recording vocals to a song. His issue was that his tape recorder only had two tracks to record onto, and one of them was taken up by the band. He was struggling to get the voice to sound natural and decided it needed some echo. First, he tried putting the vocalist in the hall next door, but the hall was too reverberant. So, he put the vocalist back into the booth and placed a speaker in the hall, which played an amplified feed from the singer’s microphone.

He then placed another microphone in the centre of the hall to pick up the echoing vocal. This fed back into his mixing console, where a fader adjusted its level, and the mic in the hall could be moved around to alter the nature of the echo. He then had complete control over how much reverberant vocal he used in his mix. This signaled the birth of sound processing, which would go on to contribute to the development of digital signal processing or DSP.

Digital signal processing covers a wide range of functions. These range from recognizing your voice when you say “Hey Siri” to monitoring the temperature or color in safety equipment. But it is the processing of sound signals that we will look at in this article. Audio engineers and producers often refer to DSP as Digital Sound Processing.

How Does It Work?

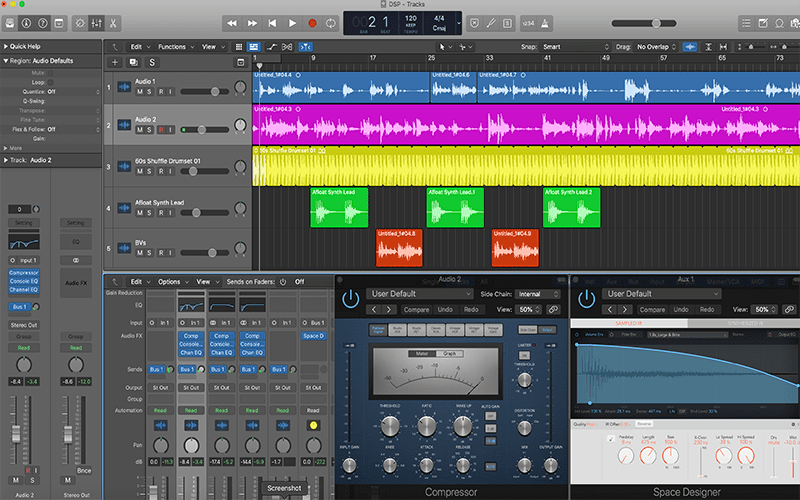

To the modern producer/engineer, DSP means plugins. Plugins are extra bits of software that can be called up by your audio sequencer. They can then be used to alter or manipulate the audio resting in the sequencer’s timeline. This is facilitated by another bit of software: a plugin API. One of the most common is Steinberg’s VST (Virtual Studio Technology). Apple has its own AU (Audio Units) system, and there are also others such as Avid’s AAX and its older TDM format. However, VST is the most common, so VST plugins usually represent the best value for money.

To start, I just need to give you a tiny bit of techie information. At a typical project setting of 48 kHz and 24-bit, every second of audio on each channel in your timeline is represented by 48,000 binary numbers (samples). Each number consists of 24 digits (a ‘1’ or a ‘0’). A DSP effect is developed by looking at a pure sound and how it is represented by these numbers, then comparing them to the equivalent numbers once that audio signal has been processed in the desired way. The Digital Signal Processor simply recalculates the numbers it has read using an algorithm. This means that the resulting numbers, when turned back into sound, will resemble the desired effect.
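To make that concrete, here is a minimal Python sketch, assuming a mono signal at 48 kHz and 24-bit. It builds one second of a test tone as a list of numbers and then "processes" it with about the simplest algorithm there is, a gain change. The function names `sine_samples` and `apply_gain` are made up for illustration and do not come from any plugin format.

```python
import math

SAMPLE_RATE = 48_000                    # samples per second, as in the text
BIT_DEPTH = 24                          # bits per sample, as in the text
FULL_SCALE = 2 ** (BIT_DEPTH - 1) - 1   # largest positive signed 24-bit value


def sine_samples(freq_hz, seconds=1.0, amplitude=0.5):
    """Return a sine tone as a list of signed 24-bit integers."""
    count = int(SAMPLE_RATE * seconds)
    return [
        round(amplitude * FULL_SCALE * math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE))
        for n in range(count)
    ]


def apply_gain(samples, gain_db):
    """A trivial 'algorithm': scale every sample by a gain given in decibels."""
    factor = 10 ** (gain_db / 20)
    # Clamp so every result still fits in 24 bits.
    return [max(-FULL_SCALE - 1, min(FULL_SCALE, round(s * factor))) for s in samples]


tone = sine_samples(440.0)        # one second of a 440 Hz tone
quieter = apply_gain(tone, -6.0)  # the same tone, roughly half the amplitude
print(len(tone))                  # 48000 numbers for one second of audio
```

Every effect described below follows the same pattern: read the numbers, recalculate them, write them back out. Only the recalculation gets more interesting.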

Types Of Digital Signal Processor

In the days of analog recording studios, sound processing was roughly split between two types: Time-Based Processing and Dynamic Processing. Digital recording has adopted this precedent and the methodology that goes with it.

Time-Based Digital Signal Processing

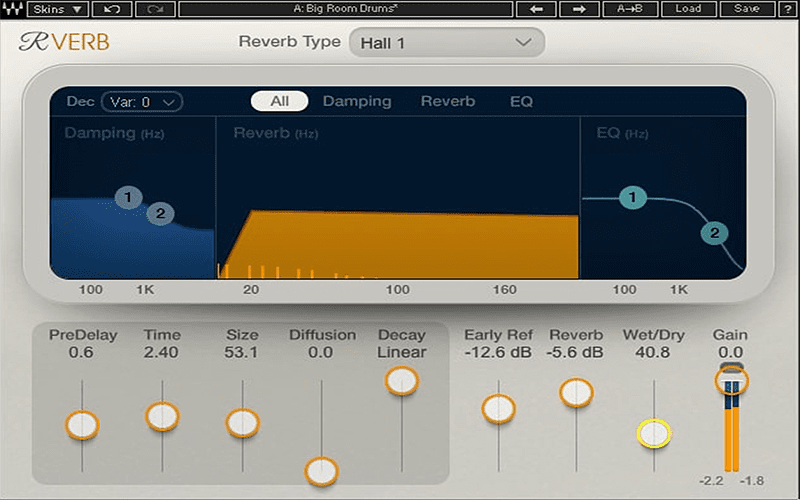

This type of signal processing involves altering the timing of certain elements in the audio signal and feeding the result back into the mix through a controllable route (known as a bus). This is similar to our engineer in Memphis. These days, we have the luxury of plenty more audio channels, so we can apply these audio effects during playback. This gives us much greater control of the final mix. Reverb, delay and chorus fit into this category.

Reverb is one of the most common music plugins used by music producers. As a rule, I create a separate audio bus in my sequencer (a group channel) and place the plugin there. I then send my clean signal over to this new channel. This gives me a great deal of control over the audio signal and how it affects the final output. I can control how much I send over, how much I allow into the mix and all the settings in my plugin to alter the effect’s nature.

It also allows me to send several signals over to the one plugin, saving me from having to calibrate numerous plugins during a mix and placing less strain on my computer CPU. Tip: These days, most music producers use reverb sparingly and with short tails (the time it takes the reverb to die down).
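The send routing described above can be sketched in a few lines of Python. This is only an illustration of the signal flow, not how any particular sequencer implements it. The names `mix_with_send_bus` and `toy_echo` are hypothetical, and `toy_echo` is a crude stand-in for a real reverb plugin.

```python
def mix_with_send_bus(tracks, send_levels, effect, return_level):
    """Mix dry tracks with one shared effect fed from a send bus.

    tracks       -- list of equal-length sample lists (floats in -1.0..1.0)
    send_levels  -- one send amount per track (0.0 = none, 1.0 = full)
    effect       -- any function taking and returning a list of samples
    return_level -- how much of the processed (wet) signal enters the mix
    """
    length = len(tracks[0])
    # Sum every track's send into a single bus signal.
    bus = [sum(level * track[n] for track, level in zip(tracks, send_levels))
           for n in range(length)]
    wet = effect(bus)  # the effect runs once, however many tracks feed it
    dry = [sum(track[n] for track in tracks) for n in range(length)]
    return [d + return_level * w for d, w in zip(dry, wet)]


def toy_echo(samples, delay=2, decay=0.5):
    """Stand-in for a reverb: outputs only a decayed copy `delay` samples later."""
    out = [0.0] * len(samples)
    for n in range(delay, len(samples)):
        out[n] = decay * samples[n - delay]
    return out


vocal = [1.0, 0.0, 0.0, 0.0]
guitar = [0.0, 1.0, 0.0, 0.0]
mix = mix_with_send_bus([vocal, guitar], [0.5, 0.2], toy_echo, return_level=0.5)
```

The three controls mentioned above map directly onto the sketch: the send levels decide how much of each track reaches the bus, `return_level` decides how much comes back into the mix, and the effect's own settings (here `delay` and `decay`) shape its character.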

Delay

The same principle can be applied to Delay. The Delay does exactly what it says on the tin – it replays whatever is fed into it at a predetermined time later (usually measured in milliseconds). It was a very popular vocal effect some years ago, as it thickened the texture of the voice and added a mystical feel. Now, it is employed often as a musical instrument in its own right, with weird and wonderful techniques applied to many audio sources, dropping in and out when needed.
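As a rough sketch of what a delay does to the numbers, the Python below stores incoming samples in a circular buffer and plays them back a fixed time later. The `feedback` and `mix` parameters are assumptions of this sketch, modelled on controls that delay plugins commonly offer. Real plugins add filtering, interpolation and much more.

```python
def delay_effect(samples, delay_ms, sample_rate=48_000, feedback=0.0, mix=0.5):
    """Replay the input `delay_ms` later, optionally feeding repeats back in."""
    delay_samples = max(1, round(sample_rate * delay_ms / 1000))
    buffer = [0.0] * delay_samples  # circular buffer holding the delayed signal
    out = []
    pos = 0
    for x in samples:
        delayed = buffer[pos]                      # what went in delay_ms ago
        out.append((1 - mix) * x + mix * delayed)  # blend dry and delayed signal
        buffer[pos] = x + feedback * delayed       # feedback creates repeating echoes
        pos = (pos + 1) % delay_samples
    return out


# A single click followed by silence, at a tiny sample rate for readability.
click = [1.0] + [0.0] * 8
echoes = delay_effect(click, delay_ms=3, sample_rate=1000, feedback=0.5)
```

With `feedback` above zero, each repeat is fed back into the buffer, so the click returns every 3 ms at half the level of the previous repeat.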

The beauty of digital signal processing is that you can incorporate techniques that weren’t available to analog producers. In the case of delay, the plugin can be linked to the tempo of the track. The delay time can then be set as a musical note value relative to that tempo. So, for instance, a single-syllable vocal delivery can be fed back into the mix as a triplet over the original beat, or any other variation, all without having to get the scientific calculator out.
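For the curious, the calculation the plugin is saving you from looks roughly like this. A quarter note lasts 60,000 ms divided by the tempo in BPM, and every other note value is a fraction of that. The table of note names and the function `delay_time_ms` are my own illustration, not any plugin's actual settings.

```python
# Note lengths expressed in quarter notes (beats).
NOTE_LENGTHS = {
    "1/4": 1.0,
    "1/8 dotted": 0.75,
    "1/4 triplet": 2 / 3,
    "1/8": 0.5,
    "1/8 triplet": 1 / 3,
    "1/16": 0.25,
}


def delay_time_ms(bpm, note="1/4"):
    """Return the delay time in milliseconds for a note value at a given tempo."""
    quarter_ms = 60_000 / bpm
    return quarter_ms * NOTE_LENGTHS[note]


for note in NOTE_LENGTHS:
    print(f"{note:>12}: {delay_time_ms(120, note):7.2f} ms")
```

At 120 BPM a quarter note lasts 500 ms, so an eighth-note triplet delay works out to roughly 166.67 ms.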


Chorus is another time-based digital signal processor still commonly used. It is often used to give the audio source a thicker feel, although it is not used as lavishly now as in the past. The chorus creates its effect by delaying a copy of the source audio by a few milliseconds, and then modulating that copy, varying its timing and amplitude with a low-frequency signal, before blending it with the original. Originally used to thicken or enhance vocals and string instruments, it was soon discovered by producers and engineers that it could be applied to almost any sound source to achieve interesting results.
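Here is a minimal Python sketch of that idea, assuming a single delayed voice whose delay time is swept by a sine-wave LFO (low-frequency oscillator). For simplicity it modulates the timing only, not the amplitude, and all parameter names and default values are illustrative guesses rather than any plugin's settings.

```python
import math


def chorus(samples, sample_rate=48_000, base_delay_ms=15.0, depth_ms=5.0,
           rate_hz=0.8, mix=0.5):
    """Blend the input with a copy whose delay time is swept by a slow sine LFO.

    Assumes depth_ms is smaller than base_delay_ms so the delay stays positive.
    """
    out = []
    for n, x in enumerate(samples):
        lfo = math.sin(2 * math.pi * rate_hz * n / sample_rate)
        delay = (base_delay_ms + depth_ms * lfo) * sample_rate / 1000  # in samples
        pos = n - delay  # fractional position of the delayed sample
        if pos < 0:
            delayed = 0.0  # nothing has been played that far back yet
        else:
            i = int(pos)
            frac = pos - i
            nxt = samples[min(i + 1, len(samples) - 1)]
            # Linear interpolation between the two neighbouring samples.
            delayed = (1 - frac) * samples[i] + frac * nxt
        out.append((1 - mix) * x + mix * delayed)
    return out


# One second of a 220 Hz tone, thickened with the default settings.
tone = [0.5 * math.sin(2 * math.pi * 220 * n / 48_000) for n in range(48_000)]
thick = chorus(tone)
```

Because the delay time keeps drifting, the copy is constantly slightly out of time and out of tune with the original, which is what produces the thicker sound.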

Remember, with all of the above, your mix will benefit from the DSP plugin resting in a separate channel and having the original (dry) signal sent to it. This is usually known as a send (or send/return) setup. It should not be confused with sidechaining, where one signal controls a processor acting on another. This method is in stark contrast to the use of Dynamic Digital Signal Processing.

Dynamic-Based Digital Signal Processing

With dynamic DSP, you affect the signal by passing it through the DSP plugin. The plugin changes various aspects of the signal’s dynamics – amplitude, frequency band, etc – as it passes through. A common form of dynamic DSP in music production is compression and, unlike the time-based effects mentioned above, compression is used more these days than ever before.

Compression

So, how does compression work?

Imagine you had a piece of string on a pulley system running from the front door to the volume control of your stereo. Imagine that you are 15 years old and are playing the stereo too loud while your folks are out. As your parents open the front door, you need that stereo to turn down quickly, and thanks to your string-based genius, it does.

That is the principle of compression. It is an automated volume control that will reduce the amplitude of your signal if it goes too high and let it play unhindered when it isn’t above a certain level. You set a level (threshold), and as the signal passes the threshold, the compressor will act on anything above it. You can also vary how the compressor reduces the level, firstly by adjusting the ratio, which determines how much the signal is reduced. For example, if the audio signal measures 6 dB over the threshold and your ratio is set to 3:1, then only 2 dB of the signal will be allowed over the threshold level. You can also adjust how soon the compression kicks in, how smoothly it will act on the signal and various other settings.
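The threshold and ratio arithmetic can be sketched in Python as follows. This is a deliberately bare-bones version that reacts to each sample instantly, with no attack, release or knee settings, so it shows the principle rather than how a usable compressor sounds. The function names and the -18 dB default threshold are assumptions made for the example.

```python
import math


def compressed_level_db(level_db, threshold_db, ratio):
    """Static compressor curve: anything over the threshold is divided by `ratio`."""
    if level_db <= threshold_db:
        return level_db  # below the threshold the signal plays unhindered
    return threshold_db + (level_db - threshold_db) / ratio


def compress(samples, threshold_db=-18.0, ratio=3.0):
    """Apply the static curve to each sample (floats in -1.0..1.0)."""
    out = []
    for x in samples:
        if x == 0:
            out.append(0.0)
            continue
        level_db = 20 * math.log10(abs(x))
        gain_db = compressed_level_db(level_db, threshold_db, ratio) - level_db
        out.append(x * 10 ** (gain_db / 20))
    return out


# The example from the text: 6 dB over a -18 dB threshold at a ratio of 3:1.
print(compressed_level_db(-12.0, -18.0, 3.0))  # -16.0, i.e. 2 dB over the threshold
```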


The result is audio that will sit better in your mix, as it isn’t fluctuating from low to high and back again. It also changes the texture of the audio signal, often giving it more impact at the set level. An extreme example of this is DJs’ voices on mainstream commercial radio stations. The non-technical term often used is “in-your-face”.

Compression came into its own when audio engineers were recording onto tape. Tape needed a high input level to record at optimum quality. The danger of high input comes when there is an unexpected spike, e.g. when the singer blasts out a phrase or screams. The compressor was in line with the signal and controlled these spikes so the signal didn’t overload the tape. Although no longer needed, some engineers still use this method.

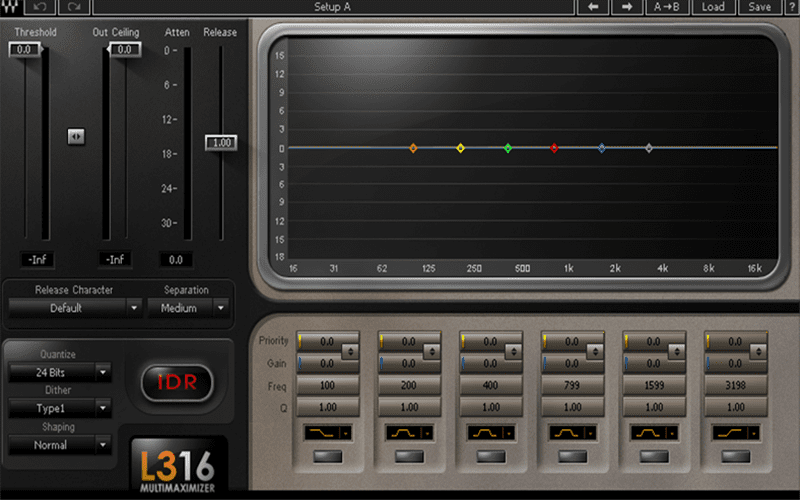

Limiters

Limiters are also playing a greater part in modern music production as they become more and more sophisticated. A limiter is like a compressor that acts much more harshly and quickly. It is used either to boost the perceived signal level without crossing the dreaded 0 dBFS maximum, or to control a highly fluctuating sound source.
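A very simplified limiter might look like the Python sketch below. It clamps the gain down instantly whenever a sample would exceed the ceiling and then lets the gain recover gradually. The `boost_db` and `release` parameters are assumptions of this sketch. Commercial limiters typically add look-ahead and far smarter release behaviour.

```python
def limit(samples, ceiling=1.0, boost_db=0.0, release=0.999):
    """Boost the signal, then hold every sample at or below `ceiling`.

    release -- closer to 1.0 means the gain recovers more slowly after a peak
    """
    boost = 10 ** (boost_db / 20)
    gain = 1.0
    out = []
    for x in samples:
        x *= boost
        peak = abs(x)
        # Gain that would bring this sample exactly down to the ceiling.
        needed = ceiling / peak if peak > ceiling else 1.0
        # Drop instantly when required, otherwise drift back towards 1.0.
        gain = min(needed, 1.0 - release * (1.0 - gain))
        out.append(x * gain)
    return out


peaky = [0.1, 0.5, 2.0, 0.5, -3.0, 0.1]
safe = limit(peaky)
```

Raising `boost_db` pushes more of the signal up against the ceiling, which is how a limiter increases perceived loudness without the output going over.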

EQ

EQ, or Equalization, is another well-used digital signal processor. It started life as a filter that would reduce (attenuate) certain frequencies. These days, EQs can both attenuate and boost, and can be set to cover a very wide or very narrow band of frequencies. The most used feature is probably the High Pass Filter or HPF. It gradually reduces frequencies below a cutoff point (typically somewhere around 75 Hz), with the reduction increasing towards 0 Hz, while allowing higher frequencies to pass.
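To show what a high pass filter does to the numbers, here is a sketch of about the simplest one possible: a first-order filter, which rolls off at a gentle 6 dB per octave below the cutoff. The function name and the 75 Hz default are illustrative. The HPF in a real EQ plugin will usually offer steeper slopes and a different design.

```python
import math


def high_pass(samples, cutoff_hz=75.0, sample_rate=48_000):
    """First-order high pass filter, the digital cousin of a capacitor and resistor."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out = []
    prev_x = 0.0
    prev_y = 0.0
    for x in samples:
        # The output follows changes in the input, while steady levels decay away.
        y = alpha * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


def sine(freq_hz, seconds=1.0, sample_rate=48_000):
    """Helper: a full-scale sine tone as a list of floats."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(int(sample_rate * seconds))]


rumble = high_pass(sine(20.0))    # well below the cutoff: strongly reduced
treble = high_pass(sine(5000.0))  # well above the cutoff: almost untouched
```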

Modern EQ plugins provide all sorts of control, allowing you to pinpoint exact frequencies and any breadth of frequency band you would like to cut or boost. I have two tips here.

- Always consider using an HP filter. Lower frequencies are sometimes hard to spot, but they can still get in the way of the upper band, confusing your mix decisions.

- Try to get into the habit of attenuating rather than boosting. It helps avoid level problems further into your project.

Of late, EQ has been superseded in certain cases by Dynamic EQ. This is a combination of compressor and EQ, where the cut or boost applied to a chosen band of frequencies responds to the level of the signal in that band.

Conclusion

There are now thousands of audio plugins to choose from. Above are examples of a few of the most common. As digital technology advances, the possibilities in terms of what the modern producer can do are practically limitless; some good, some not so.

While delving into this ever-expanding smorgasbord of goodies, it is worth remembering that the guidelines for Digital Signal Processing didn’t come from old analog techniques by accident. Those hard-earned principles can give you a good grounding. As with all aspects of music production, it is best to learn the rules before you break them. I hope this has been a helpful starting point.
